Sub-Aperture Feature Adaptation in Single Image Super-resolution Model for Light Field Imaging

Aupendu Kar, Suresh Nehra, Jayanta Mukhopadhyay, Prabir Kumar Biswas

Department of Electronics and Electrical Communication Engineering

Indian Institute of Technology Kharagpur, India

Abstract

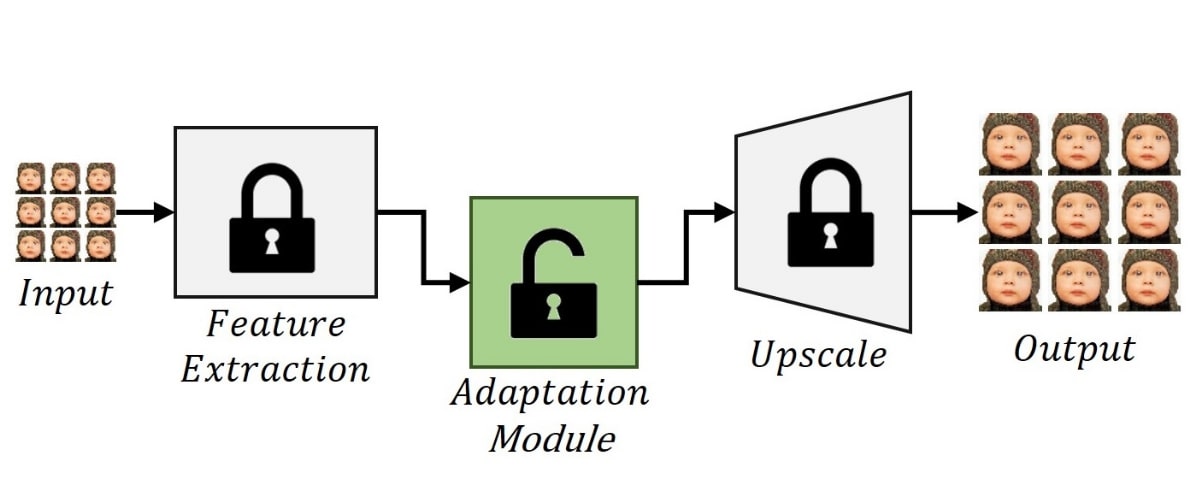

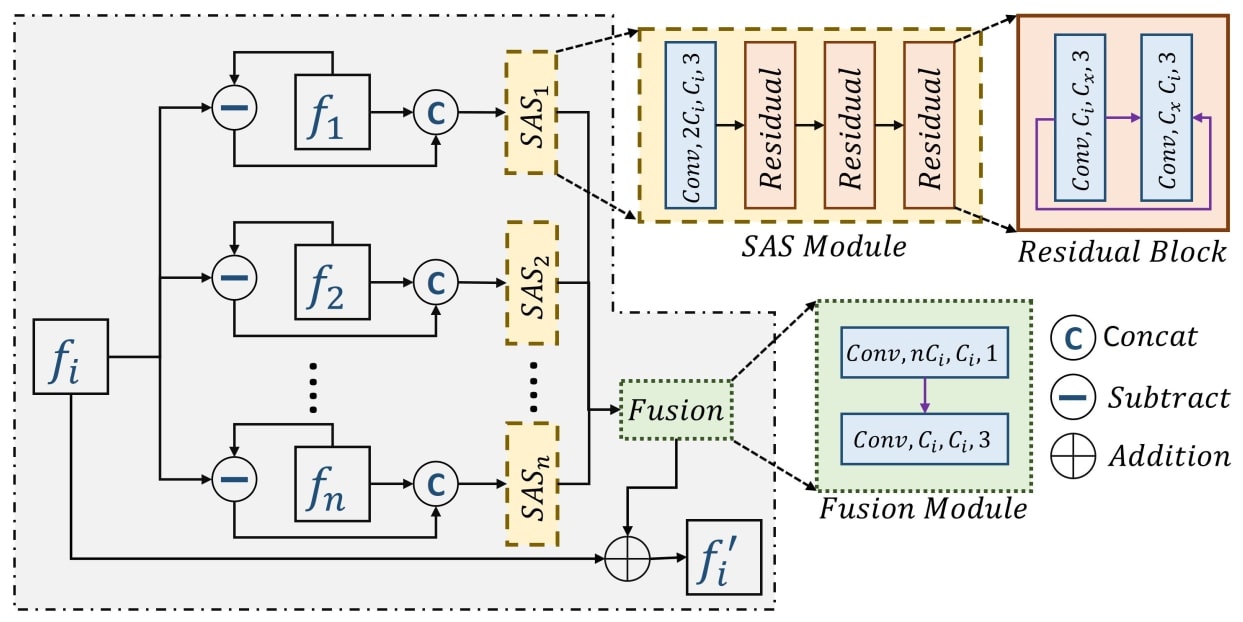

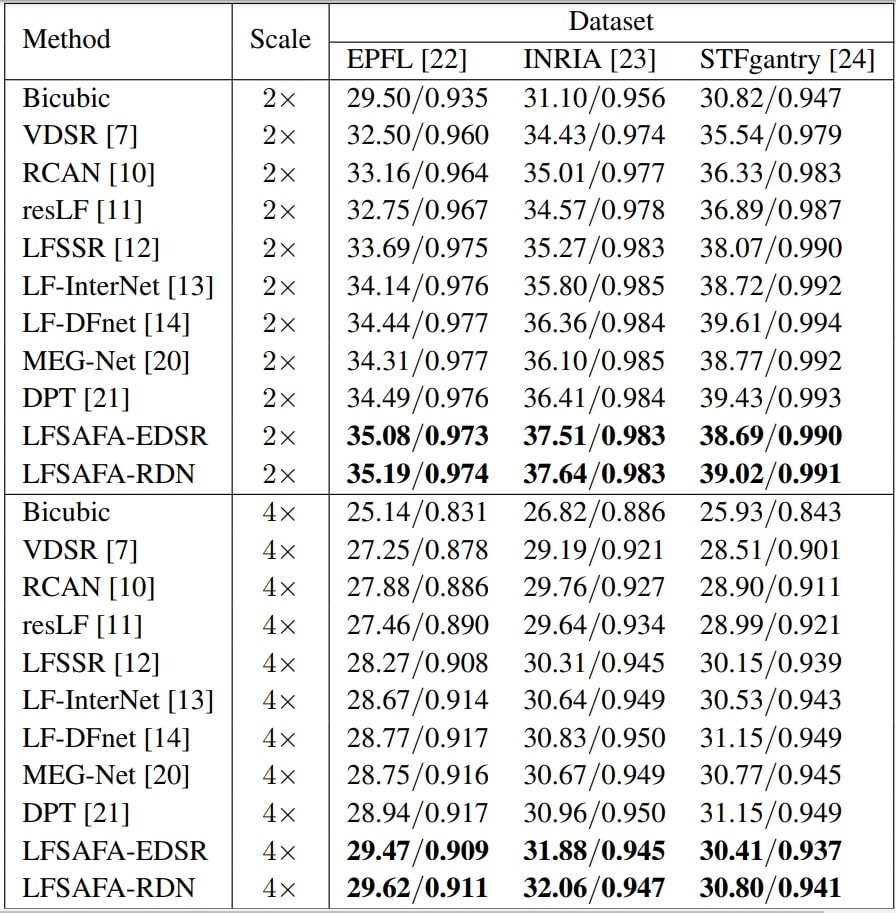

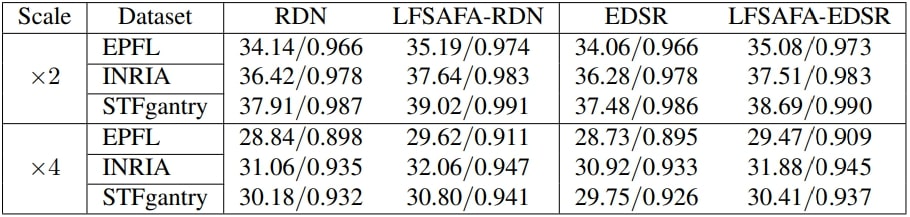

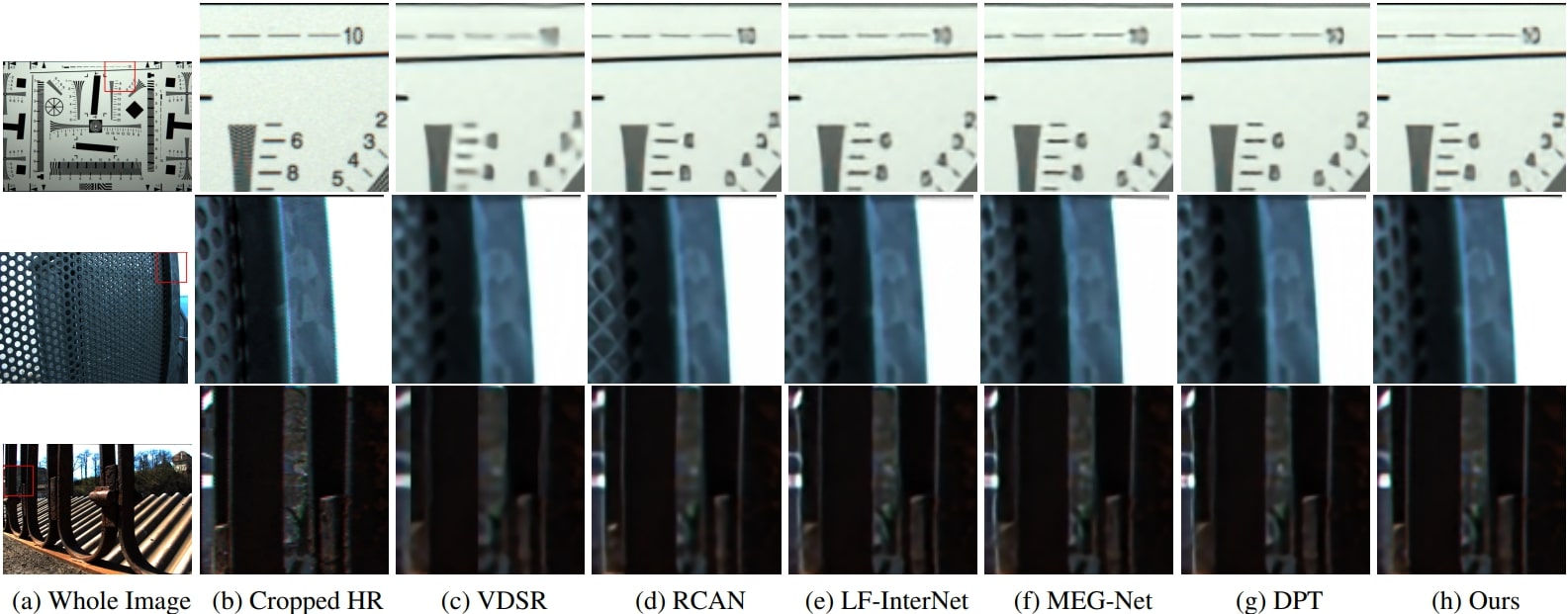

With the availability of commercial Light Field (LF) cameras, LF imaging has emerged as an up-and-coming technology in computational photography. However, the spatial resolution of commercial micro-lens-based LF cameras is significantly constrained by the inherent multiplexing of spatial and angular information, and this becomes the main bottleneck for other applications of light field cameras. This paper proposes an adaptation module for a pre-trained Single Image Super-Resolution (SISR) network, so that powerful SISR models can be leveraged instead of highly engineered, LF-domain-specific super-resolution models. The adaptation module consists of a Sub-aperture Shift block and a fusion block. It adapts the SISR network to further exploit the spatial and angular information in LF images and thereby improve super-resolution performance. Experimental validation shows that the proposed method outperforms existing light field super-resolution algorithms. Compared with the same pre-trained SISR models, it also achieves PSNR gains of more than 1 dB across all datasets for scale factor 2, and PSNR gains of 0.6 to 1 dB for scale factor 4.

Highlights

- We propose a light-field domain adaptation module to achieve light field super-resolution (LFSR) using SISR models. To the best of our knowledge, this is the first work in this direction.

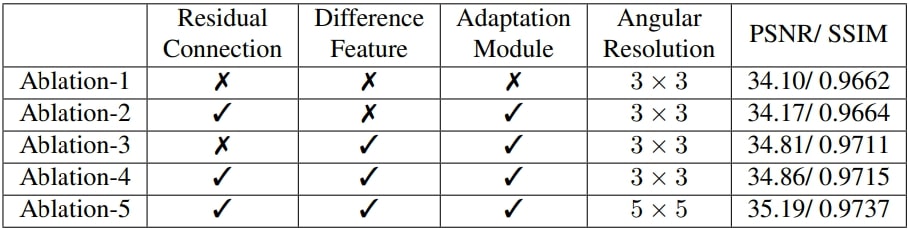

- We show that the proposed module can utilize the angular information present in sub-aperture (SA) images to improve performance, and ablation studies support our claims.

- Our qualitative and quantitative analysis shows that our method performs better than light-field domain-specific super-resolution solutions, and that any SISR model can adopt the proposed modification to work for LFSR.

Proposed Module
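
As a rough illustration of the idea behind the Sub-aperture Shift block and fusion block named in the abstract, the sketch below shifts each sub-aperture view of a 4D light field toward the centre view and then fuses the aligned views. It is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the function names `shift_sub_apertures` and `fuse_views`, the integer `disparity` parameter, the wrap-around shift, and the plain averaging used for fusion are all hypothetical stand-ins. This page does not specify the module's internals; since the module adapts sub-aperture features inside a pre-trained SISR network, the real blocks presumably act on feature maps rather than on raw pixels.

```python
import numpy as np


def shift_sub_apertures(lf, disparity):
    """Shift every sub-aperture view toward the centre view.

    lf        : array of shape (U, V, H, W), one grayscale image per
                angular position (u, v).
    disparity : integer pixel shift per unit of angular offset
                (hypothetical parameter, assumed constant over the scene).
    """
    U, V, H, W = lf.shape
    uc, vc = U // 2, V // 2          # angular index of the centre view
    shifted = np.empty_like(lf)
    for u in range(U):
        for v in range(V):
            dy = disparity * (u - uc)
            dx = disparity * (v - vc)
            # np.roll wraps around at the borders; this keeps the toy
            # example exact but a real model would pad or crop instead.
            shifted[u, v] = np.roll(lf[u, v], shift=(dy, dx), axis=(0, 1))
    return shifted


def fuse_views(shifted):
    """Placeholder fusion: plain average over both angular dimensions."""
    return shifted.mean(axis=(0, 1))


# Synthetic 3x3 light field: each view is the centre image translated
# by an amount proportional to its angular offset.
rng = np.random.default_rng(0)
centre = rng.random((8, 8))
U = V = 3
d = 1
lf = np.stack([
    np.stack([np.roll(centre, (-d * (u - 1), -d * (v - 1)), axis=(0, 1))
              for v in range(V)])
    for u in range(U)
])

aligned = shift_sub_apertures(lf, disparity=d)
fused = fuse_views(aligned)
```

With this synthetic input, where every view is a pure integer translation of the centre view, the shifted stack becomes identical across views and the average reproduces the centre image. Real scenes have depth-dependent disparity, so a single fixed shift is only a toy model of the alignment step.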

Results
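
The gains quoted in the abstract are stated in PSNR, so a short sketch of how PSNR is commonly computed may help in reading them. This is a generic definition, not the evaluation script used for the paper: the function name `psnr` and the `peak` parameter are hypothetical, and the exact protocol (colour channel, border cropping, averaging over sub-aperture views) is not given on this page. Because PSNR is logarithmic in the mean squared error (MSE), a 1 dB gain corresponds to roughly a 21% reduction in MSE.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    peak is the maximum possible pixel value (1.0 for images scaled to
    [0, 1], 255 for 8-bit images).
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


# Toy comparison: a constant error of 0.1 versus a constant error of 0.05.
target = np.zeros((4, 4))
baseline_db = psnr(target, np.full((4, 4), 0.1))
improved_db = psnr(target, np.full((4, 4), 0.05))
gain_db = improved_db - baseline_db
```

In this toy case, halving the pixel error divides the MSE by four, which raises PSNR by about 6 dB.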

Ablation Study

Visual Comparison


References

- [7] Jiwon Kim, Jung Kwon Lee, and Kyoung Mu Lee, “Accurate image super-resolution using very deep convolutional networks,” in CVPR, 2016, pp. 1646–1654.

- [10] Yulun Zhang, Kunpeng Li, et al., “Image super-resolution using very deep residual channel attention networks,” in ECCV, 2018, pp. 286–301.

- [11] Shuo Zhang, Youfang Lin, and Hao Sheng, “Residual networks for light field image super-resolution,” in CVPR, 2019, pp. 11046–11055.

- [12] Henry Wing Fung Yeung, et al., “Light field spatial super-resolution using deep efficient spatial-angular separable convolution,” IEEE TIP, vol. 28, no. 5, pp. 2319–2330, 2018.

- [13] Yingqian Wang, Longguang Wang, et al., “Spatial-angular interaction for light field image super-resolution,” in ECCV, 2020.

- [14] Yingqian Wang, Jungang Yang, et al., “Light field image super-resolution using deformable convolution,” IEEE TIP, vol. 30, pp. 1057–1071, 2020.

- [20] Shuo Zhang, Song Chang, and Youfang Lin, “End-to-end light field spatial super-resolution network using multiple epipolar geometry,” IEEE TIP, vol. 30, pp. 5956–5968, 2021.

- [21] Shunzhou Wang, Tianfei Zhou, Yao Lu, and Huijun Di, “Detail-preserving transformer for light field image super-resolution,” in AAAI, 2022.

Citation (BibTeX)

@inproceedings{kar2022subaperture,
  title={Sub-Aperture Feature Adaptation in Single Image Super-resolution Model for Light Field Imaging},
  author={Aupendu Kar and Suresh Nehra and Jayanta Mukhopadhyay and Prabir Kumar Biswas},
  booktitle={2022 IEEE International Conference on Image Processing (ICIP)},
  year={2022},
  organization={IEEE}
}